Model Management Guidance

Definitions and Interpretations

The following terms shall have the meaning assigned to them for the purpose of interpreting these Standards and the related Guidance:

1. Board: As defined in the CBUAE’s Corporate Governance Regulation for Banks.

2. Causality:

(written in lower case as “causality”): Relationship between cause and effect. It is the influence of one event on the occurrence of another event.

3. CBUAE: Central Bank of the United Arab Emirates.

4. Correlation:

(written in lower case as “correlation”): Any statistical relationship between two variables, without an explicit causal mechanism explaining the observed joint behaviour. Several metrics exist to capture this relationship. Amongst others, linear correlation is often captured by the Pearson coefficient. Monotonic relationships, whether linear or non-linear, are often captured by Spearman’s rank correlation coefficient.

5. Correlation Analysis:

(written in lower case as “correlation analysis”): Correlation analysis refers to a process by which the relationships between variables are explored. For a given set of data and variables, it consists of observing (i) the statistical properties of each variable independently, (ii) the relationship between the dependent variable and each of the independent variables on a bilateral basis, and (iii) the relationships between the independent variables themselves.

6. CI:

(Credit Index): In the context of credit modelling, a credit index is a quantity defined over (-∞, +∞) and derived from observable default rates, for instance through a probit transformation. The CI represents a systemic driver of creditworthiness. While this index is synthetic (an abstract driver), it is often assimilated to the creditworthiness of a specific industry or geography.
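A probit transformation of default rates into a credit index can be sketched as below. The sign convention (a higher index meaning better creditworthiness) is an assumption made for this illustration; other conventions exist. The function names and sample default rates are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sigma 1)


def credit_index(default_rate):
    """Probit-transform a default rate in (0, 1) into a credit index on (-inf, +inf).

    Assumed sign convention: CI = -inverse_normal_cdf(default_rate), so that a higher
    index means better creditworthiness (lower default rate).
    """
    if not 0.0 < default_rate < 1.0:
        raise ValueError("default rate must lie strictly between 0 and 1")
    return -STD_NORMAL.inv_cdf(default_rate)


def default_rate_from_index(ci):
    """Inverse transformation: recover the default rate implied by a credit index."""
    return STD_NORMAL.cdf(-ci)


# Hypothetical observed annual default rates for one segment.
observed = [0.015, 0.020, 0.031]
indices = [credit_index(dr) for dr in observed]  # decreases as default rates rise
```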

7. Default:

(written in lower case as “default”): The definition of default depends on the modelling context, i.e. either the development of rating models or the calibration of probabilities of default. For a comprehensive definition, refer to the section on rating models in the MMG.

8. Deterministic Model:

(written in lower case as “deterministic model”): A deterministic model is a mathematical construction linking, with certainty, one or several dependent variables to one or several independent variables. Deterministic models are different from statistical models: the concept of confidence interval does not apply to them. Examples of deterministic models include NPV models, financial cash flow models or exposure models for amortising facilities.
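An exposure model for an amortising facility is a simple example of a deterministic model: the same inputs always produce the same outstanding balances, with no confidence interval. The sketch below assumes a constant-instalment (annuity) repayment schedule and a fixed periodic rate; the function names and parameters are hypothetical.

```python
def annuity_payment(principal, periodic_rate, n_periods):
    """Constant instalment that fully amortises the principal over n_periods (assumed annuity)."""
    if periodic_rate == 0:
        return principal / n_periods
    return principal * periodic_rate / (1 - (1 + periodic_rate) ** -n_periods)


def outstanding_schedule(principal, periodic_rate, n_periods):
    """Outstanding balance after each instalment; fully determined by the inputs."""
    payment = annuity_payment(principal, periodic_rate, n_periods)
    balance, schedule = principal, []
    for _ in range(n_periods):
        balance = balance + balance * periodic_rate - payment  # accrue interest, then repay
        schedule.append(max(balance, 0.0))  # guard against tiny negative rounding residue
    return schedule


# Hypothetical 5-year monthly facility: 1,000,000 at 0.5% per month.
schedule = outstanding_schedule(principal=1_000_000, periodic_rate=0.005, n_periods=60)
```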

9. DMF:

(Data Management Framework): Set of policies, procedures and systems designed to organise and structure the management of data employed for modelling.

10. DPD:

(Days-Past-Due): A payment is considered past due if it has not been made by its contractual due date. The days-past-due is the number of days that a payment is past its due date, i.e. the number of days for which a payment is late.
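The days-past-due count is plain calendar arithmetic, sketched below for payments that remain unpaid at the assessment date. Driving an obligor-level DPD by its oldest unpaid instalment is a common convention but is an assumption here, as are the function names.

```python
from datetime import date


def days_past_due(due_date: date, as_of: date) -> int:
    """Days by which an unpaid payment is late at `as_of` (0 if not yet due)."""
    return max((as_of - due_date).days, 0)


def obligor_dpd(unpaid_due_dates, as_of):
    """Hypothetical convention: obligor DPD is driven by the oldest unpaid instalment."""
    return max((days_past_due(d, as_of) for d in unpaid_due_dates), default=0)


# A payment due on 31 January 2024 and still unpaid on 1 March 2024 (2024 is a leap year).
print(days_past_due(date(2024, 1, 31), date(2024, 3, 1)))  # 30
```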

11. DSIB:

(Domestic Systemically Important Banks): These are UAE banks deemed sufficiently large and interconnected to warrant the application of additional regulatory requirements. The identification of the institutions is based upon a framework defined by the CBUAE.

12. EAD:

(Exposure At Default): Expected exposure of an institution towards an obligor (or a facility) upon a future default of this obligor (or its facility). It also refers to the observed exposure upon the realised default of an obligor (or a facility). This amount materialises at the default date and can be uncertain at reporting dates prior to the default date. The uncertainty surrounding EAD depends on the type of exposure and the possibility of future drawings. In the case of a lending facility with a pre-agreed amortisation schedule, the EAD is known. In the case of off-balance sheet exposures such as credit cards, guarantees, working capital facilities or derivatives, the EAD is not certain on the date of measurement and should be estimated with statistical models.
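For facilities with an undrawn component, one common way to express the estimated EAD is through a credit conversion factor (CCF) applied to the undrawn amount. The sketch below assumes this CCF convention; the CCF value itself would be the output of the statistical model mentioned above, and the function names are hypothetical.

```python
def ead_with_ccf(drawn, limit, ccf):
    """EAD = drawn + CCF * undrawn (assumed CCF convention).
    The CCF would itself be estimated by a statistical model."""
    if not 0.0 <= ccf <= 1.0:
        raise ValueError("CCF expected in [0, 1] in this sketch")
    undrawn = max(limit - drawn, 0.0)
    return drawn + ccf * undrawn


def realised_ccf(drawn_at_ref, limit_at_ref, exposure_at_default):
    """Observed CCF for one defaulted facility: share of the undrawn amount drawn by default."""
    undrawn = limit_at_ref - drawn_at_ref
    if undrawn <= 0:
        return None  # undefined when the facility was already fully drawn
    return (exposure_at_default - drawn_at_ref) / undrawn


print(ead_with_ccf(drawn=60_000, limit=100_000, ccf=0.5))  # 80000.0
```

For a fully drawn amortising loan, the undrawn amount is zero and the EAD collapses to the known outstanding balance, consistent with the definition above.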

13. EAR: (Earnings At Risk): Refer to NII.

14. ECL:

(Expected Credit Loss): Metric supporting the estimation of provisions under IFRS9 to cover credit risk arising from facilities and bonds in the banking book. It is designed as a probability-weighted expected loss.
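The probability-weighted nature of ECL can be illustrated with a simplified single-period, undiscounted calculation across macroeconomic scenarios. A full IFRS9 lifetime ECL would also use marginal PDs per period and discounting, which this sketch deliberately omits; the scenario weights, PDs and LGDs are hypothetical.

```python
def expected_credit_loss(scenarios, ead):
    """Probability-weighted ECL over scenarios (single period, undiscounted).
    Each scenario is a (weight, pd, lgd) tuple; weights must sum to 1."""
    total_weight = sum(w for w, _, _ in scenarios)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("scenario weights must sum to 1")
    return sum(w * pd * lgd * ead for w, pd, lgd in scenarios)


scenarios = [
    (0.5, 0.02, 0.45),  # baseline (hypothetical figures)
    (0.3, 0.04, 0.50),  # downside
    (0.2, 0.01, 0.40),  # upside
]
ecl = expected_credit_loss(scenarios, ead=1_000_000)  # approximately 11,300
```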

15. Economic Intuition:

(written in lower case as “economic intuition”): Also referred to as business sense. Property of a model and its output to be interpreted in terms and metrics that are commonly employed for business and risk decisions. It also refers to the property of the model variables and the model outputs to meet the intuition of experts and practitioners, in such a way that the model can be explained and used to support decision-making.

16. Effective Challenge:

Characteristic of a validation process. An effective model validation ensures that model defects are suitably identified, discussed and addressed in a timely fashion. Effectiveness is achieved via certain key features of the validation process such as independence, expertise, clear reporting and prompt action from the development team.

17. EVE:

(Economic Value of Equity): It is defined as the difference between the present value of the institution’s assets and the present value of its liabilities. The EVE is sensitive to changes in interest rates and is used in the measurement of interest rate risk in the banking book.
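The sensitivity of EVE to interest rates can be sketched by revaluing assets and liabilities under a base rate and a shocked rate. This minimal illustration assumes a flat curve, annual compounding and a parallel shock; real IRRBB models use full yield curves and prescribed shock scenarios. The positions and function names are hypothetical.

```python
def present_value(cash_flows, rate):
    """PV of (time_in_years, amount) cash flows at a flat, annually compounded rate (assumed)."""
    return sum(amount / (1 + rate) ** t for t, amount in cash_flows)


def eve(assets, liabilities, rate):
    """Economic value of equity: PV of assets minus PV of liabilities."""
    return present_value(assets, rate) - present_value(liabilities, rate)


def delta_eve(assets, liabilities, base_rate, shock):
    """Change in EVE under a parallel rate shock (e.g. 0.02 for +200 bp)."""
    return eve(assets, liabilities, base_rate + shock) - eve(assets, liabilities, base_rate)


assets = [(5, 1_000_000)]     # hypothetical: one 5-year bullet asset
liabilities = [(1, 900_000)]  # hypothetical: one 1-year liability
print(round(delta_eve(assets, liabilities, base_rate=0.03, shock=0.02)))
```

Because the asset reprices later than the liability, its value falls more when rates rise, so the shocked EVE is lower than the base EVE in this example.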

18. Expert-Based Models:

(written in lower case as “expert-based models”): Also referred to as judgmental models, these models rely on the subjective judgement of expert individuals rather than on quantitative data. In particular, this type of model is used to issue subjective scores in order to rank corporate clients.

19. Institutions:

(written in lower case as “institution(s)”): All banks licensed by the CBUAE, i.e. entities that take deposits from individuals and/or corporations, while simultaneously issuing loans or capital market securities.

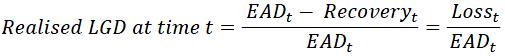

20. LGD:

(Loss Given Default): Estimation of the potential loss incurred by a lending institution upon the default of an obligor (or a facility), measured as a percentage of the EAD. It also refers to the actual loss incurred upon past defaults, also expressed as a percentage of EAD. Observed LGD levels tend to be related to PD levels, with varying strengths of correlation.
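A realised (observed) LGD for a single defaulted facility can be sketched as the economic loss divided by EAD. Discounting recoveries and workout costs back to the default date is a common convention but is assumed here, as is the choice not to floor or cap the result; the function name and figures are hypothetical.

```python
def realised_lgd(ead, recoveries, costs=(), discount_rate=0.0):
    """Realised LGD as a fraction of EAD for one defaulted facility.
    recoveries and costs are (years_after_default, amount) pairs; both are
    discounted back to the default date (assumed convention)."""
    def pv(flows):
        return sum(amount / (1 + discount_rate) ** t for t, amount in flows)

    economic_loss = ead - pv(recoveries) + pv(costs)
    return economic_loss / ead  # neither floored nor capped in this sketch


# Hypothetical workout: 60,000 recovered immediately on an EAD of 100,000.
print(realised_lgd(100_000, [(0, 60_000)]))  # 0.4
```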

21. Limits and limitations:

(written in lower case as “limits” and “limitations”): Model limits are thresholds applied to a model’s outputs and/or its parameters in order to control its performance. Model limitations are boundary conditions beyond which the model ceases to be accurate.

22. LSI:

(Large and/or Sophisticated Institutions): This group comprises DSIBs and any other institutions that are deemed large and/or with mature processes and skills. This categorisation is defined dynamically based on the outcome of regular banking supervision.

23. Macroeconomic Model:

(written in lower case as “macroeconomic model” or “macro model”): Refers to two types of models. (i) A model that links a set of independent macro variables to another single dependent macro variable or to several other dependent macro variables or (ii) a model that links a set of independent macro variables to a risk metric (or a set of risk metrics) such as probabilities of default or any other business metric such as revenues.

24. Market Data:

Refers to the various data attributes of a traded financial instrument reported by a trading exchange. It includes the quoted value of the instrument and/or the quoted parameters of that instrument that allow the derivation of its value. It also includes transaction information including the volume exchanged and the bid-ask spread.

25. Materiality:

The materiality of a model represents the financial scope covered by the model in the context of a given institution. It can be used to estimate the potential loss arising from model uncertainty (see Model Risk). Model materiality can be captured by various metrics depending on model types. Typically, total exposure can be used as a metric for credit models.

26. MMG: CBUAE’s Model Management Guidance.

27. MMS: CBUAE’s Model Management Standards.

28. Model:

(written in lower case as “model”): A quantitative method, system, or approach that applies statistical, economic, financial, or mathematical theories, techniques, and assumptions to process input data into quantitative estimates. For the purpose of the MMS and MMG, models are categorised into three distinct groups: statistical models, deterministic models and expert-based models.

29. Model Calibration:

(written in lower case as “model calibration”): Key step of the model development process. Model calibration means changing the values of the parameters and/or the weights of a model, without changing the structure of the model, i.e. without changing the nature of the variables and their transformations.
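Calibration in this sense can be illustrated on a logistic rating model by shifting only its intercept so that the average PD matches a target level, leaving the variables, transformations and other coefficients untouched. This is a sketch of one common technique under stated assumptions (a logistic link, log-odds scores already produced by the model, a target mean PD chosen elsewhere); the function names and scores are hypothetical.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def calibrate_intercept(linear_scores, target_mean_pd, lo=-20.0, hi=20.0, tol=1e-12):
    """Return the intercept shift c such that mean(logistic(score + c)) equals the target.
    Only the intercept moves; variables, transformations and weights are unchanged."""
    def mean_pd(shift):
        return sum(logistic(s + shift) for s in linear_scores) / len(linear_scores)

    # mean_pd increases with the shift, so bisection on [lo, hi] converges.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mean_pd(mid) < target_mean_pd:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical log-odds produced by an existing logistic rating model.
scores = [-4.2, -3.5, -3.0, -2.1]
shift = calibrate_intercept(scores, target_mean_pd=0.04)
recalibrated_pds = [logistic(s + shift) for s in scores]
```

Because the shift is applied uniformly, the rank ordering of obligors is preserved; only the PD level changes.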

30. Model Complexity:

(written in lower case as “model complexity”): Overall characteristic of a model reflecting the degree of ease (versus difficulty) with which one can understand the model’s conceptual framework, its practical design, calibration and usage. Amongst other things, such complexity is driven by the number of inputs, the interactions between variables, the dependency on other models, the model’s mathematical concepts and their implementation.

31. Model Construction:

(written in lower case as “model construction”): Key step of the model development process. The construction of a model depends on its nature, i.e. statistical or deterministic. For the purpose of the MMS and the MMG, model construction means the following: for statistical models, given a methodology, a set of data and transformed variables, it means estimating and choosing, with a degree of confidence, the number and nature of the independent variables along with their associated weights or coefficients. For deterministic models, given a methodology, it means establishing the relationship between a set of input variables and an output variable, without statistical confidence intervals.

32. Model Development: (written in lower case as “model development”): Means creating a model by making a set of sequential and recursive decisions according to the steps outlined in the dedicated sections of the MMS. Model re-development means conducting the model development steps again with the intention to replace an existing model. The replacement may, or may not, occur upon re-development.

33. Modelling Decision:

(written in lower case as “modelling decision”): A modelling decision is a deliberate choice that determines the core functionality and output of a model. Modelling decisions relate to each of the steps of the data acquisition, development and implementation phases. In particular, modelling decisions relate to (i) the choice of data, (ii) the analysis of data and sampling techniques, (iii) the methodology, (iv) the calibration and (v) the implementation of models. Some modelling decisions are more material than others. Key modelling decisions refer to decisions with strategic implications and/or with material consequences on the model outputs.

34. Model Risk:

Potential loss faced by institutions from making decisions based on inaccurate or erroneous outputs of models due to errors in the development, the implementation or the inappropriate usage of such models. Losses incurred from Model Risk should be understood in the broad sense as Model Risk has multiple sources. This definition includes direct quantifiable financial loss but also any adverse consequences on the ability of the institution to conduct its activities as originally intended, such as reputational damage, opportunity costs or underestimation of capital. In the context of the MMS and the MMG, Model Risk for a given model should be regarded as the combination of its materiality and the uncertainty surrounding its results.

35. Model Selection:

(written in lower case as “model selection”): This step is part of the development process. This means choosing a specific model amongst a pool of available models, each with a different set of variables and parameters.

36. Model Uncertainty:

(written in lower case as “model uncertainty”): This refers to the uncertainty surrounding the results generated by a model. Such uncertainty can be quantified as a confidence interval around the model output values. It is used as a component to estimate Model Risk.

37. Multivariate Analysis:

(written in lower case as “multivariate analysis”): For a given set of data and variables, this is a process of observing the joint distribution of the dependent and independent variables together and drawing conclusions regarding their degree of correlation and causality.

38. NII:

(Net Interest Income): To simplify notation, both Net Interest Income (for conventional products) and Net Profit Income (for Islamic products) are referred to as “NII”. In this context, ‘profit’ is assimilated to interest. NII is defined as the difference between total interest income and total interest expense over a specific time horizon, taking into account hedging. The change in NII (“∆NII”) is defined as the difference between the NII estimated with stressed interest rates under various scenarios and the NII estimated with the interest rates as of the portfolio reporting date. ∆NII is also referred to as earnings at risk (“EAR”).
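A first-order approximation of ∆NII can be sketched with a repricing gap: a net position that reprices at time t within the horizon earns (or pays) the shocked rate for the remaining part of the horizon. This simplification assumes a parallel shock, a constant balance sheet and no hedging, so it is a rough illustration rather than a full ∆NII model; the gap figures and the function name are hypothetical.

```python
def delta_nii(gaps, rate_shock, horizon=1.0):
    """Approximate change in NII over `horizon` years from a parallel rate shock.

    gaps: list of (repricing_time_in_years, rate_sensitive_assets - rate_sensitive_liabilities).
    A gap repricing at time t is exposed to the shocked rate for (horizon - t) years;
    gaps repricing after the horizon do not affect NII within it.
    """
    return sum(gap * rate_shock * max(horizon - t, 0.0) for t, gap in gaps)


# Hypothetical repricing gaps (positive = more assets than liabilities repricing).
gaps = [(0.25, 50_000_000), (0.75, -30_000_000), (2.0, 10_000_000)]
print(round(delta_nii(gaps, rate_shock=0.02)))  # 600000
```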

39. NPV:

(Net Present Value): Present value of future cash flows minus the initial investment. The present value is the amount that a rational investor is willing to pay today in exchange for receiving these cash flows in the future. NPV is estimated through a discounting method. It is commonly used to estimate various metrics for the purpose of financial accounting, risk management and business decisions.
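The discounting behind NPV can be sketched as follows, assuming end-of-period cash flows and a single constant discount rate per period; the function name is illustrative.

```python
def npv(initial_investment, cash_flows, discount_rate):
    """NPV = sum of discounted future cash flows minus the initial investment.
    cash_flows[k] is assumed to be received at the end of period k + 1."""
    pv = sum(cf / (1 + discount_rate) ** (k + 1) for k, cf in enumerate(cash_flows))
    return pv - initial_investment


print(npv(1000, [300, 400, 500], 0.0))   # 200.0: no discounting
print(npv(1000, [300, 400, 500], 0.10))  # lower, because later cash flows are worth less today
```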

40. PD:

(Probability of Default): Probability that an obligor fails to meet its contractual obligation under the terms of an agreed financing contract. Such probability is computed over a given horizon, typically 12 months, in which case it is referred to as a 1-year PD. It can also be computed over longer horizons. This probability can also be defined at several levels of granularity, including, but not limited to, single facility, pool of facilities, obligor, or consolidated group level.

41. PD Model:

(written as “PD model”): This terminology refers to a wide variety of models with several objectives. Amongst other things, these models include methods mapping the scores generated by rating models onto probabilities of default. They also include models employed to estimate the PD or the PD term structure of facilities, clients or pools of clients.

42. PD Term Structure:

(written as “PD term structure”): Refers to the probability of default over several time horizons, for instance 2 years, 5 years or 10 years. A distinction is made between the cumulative PD and the marginal PD. The cumulative PD is the total probability of default of the obligor over a given horizon. The marginal PD is the probability of default between two dates in the future, provided that the obligor has survived until the first date.
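The relationship between cumulative and (conditional) marginal PDs can be made explicit: the marginal PD between two dates equals the increase in cumulative PD divided by the probability of surviving to the first date. The sketch below assumes annual horizons; the function names and input PDs are hypothetical.

```python
def marginal_pds(cumulative):
    """Conditional marginal PDs from cumulative PDs.
    cumulative[k] is the probability of default by the end of year k + 1; the k-th
    output is the probability of defaulting in year k + 1 given survival to its start."""
    result, previous = [], 0.0
    for cum in cumulative:
        if not previous <= cum < 1.0:
            raise ValueError("cumulative PDs must be non-decreasing and below 1")
        result.append((cum - previous) / (1.0 - previous))
        previous = cum
    return result


def cumulative_pds(marginals):
    """Inverse operation: rebuild cumulative PDs from conditional marginal PDs."""
    result, survival = [], 1.0
    for m in marginals:
        survival *= 1.0 - m
        result.append(1.0 - survival)
    return result


cumulative = [0.02, 0.05, 0.09]      # hypothetical 1-, 2- and 3-year cumulative PDs
marginal = marginal_pds(cumulative)  # approximately [0.02, 0.0306, 0.0421]
```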

43. PIT (Point-In-Time) and TTC (Through-The-Cycle):

A point-in-time assessment refers to the value of a metric (typically PD or LGD) that incorporates the current economic conditions. This contrasts with a through-the-cycle assessment that refers to the value of the same metric across a period covering one or several economic cycles.

44. Qualitative validation:

A review of the model’s conceptual soundness, design and documentation, and of its development and implementation processes.

45. Quantitative validation:

A review of model numerical output, covering at least its accuracy, degree of conservatism, stability, robustness and sensitivity.

46. Rating/Scoring:

(written in lower case as “rating” or “scoring”): For the purpose of the MMS and the MMG, a rating and a score are considered the same concept, i.e. an ordinal quantity representing the relative creditworthiness of an obligor (or a facility) on a predefined scale. ‘Ratings’ are commonly used in the context of corporate assessments whilst ‘scores’ are used for retail client assessments.

47. Restructuring:

(written in lower case as “restructuring”): The definition of restructuring / rescheduling used for modelling in the context of the MMS and MMG should be understood as the definition provided in the dedicated CBUAE regulation and, in particular, in Circular 28/2010 on the classification of loans, including subsequent amendments to this Circular and any new CBUAE regulation on this topic.

48. Rating Model:

(written in lower case as “rating model”): The objective of such a model is to discriminate ex-ante between performing clients and potentially non-performing clients. Such models generally produce a score along an arbitrary scale reflecting client creditworthiness. This score can subsequently be mapped to a probability of default. However, rating models should not be confused with PD models.

49. Retail Clients:

(written in lower case as “retail clients”): Retail clients refer to individuals to whom credit facilities are granted for the following purposes: personal consumer credit facilities, auto credit facilities, overdraft and credit cards, refinanced government housing credit facilities, other housing credit facilities, credit facilities against shares to individuals. It also includes small business credit facilities for which the credit risk is managed using similar methods as applied for personal credit facilities.

50. Segment:

(written in lower case as “segment”): Subsets of an institution’s portfolio obtained by splitting the portfolio by the most relevant dimensions which explain its risk profile. Typical dimensions include obligor size, industries, geographies, ratings, product types, tenor and currency of exposure. Segmentation choices are key drivers of modelling accuracy and robustness.

51. Senior Management: As defined in the CBUAE’s Corporate Governance Regulation for Banks.

52. Statistical Model:

(written in lower case as “statistical model”): A statistical model is a mathematical construction achieved by the application of statistical techniques to samples of data. The model links one or several dependent variables to one or several independent variables. The objective of such a model is to predict, with a confidence interval, the values of the dependent variables given certain values of the independent variables. Examples of statistical models include rating models or value-at-risk (VaR) models. Statistical models are different from deterministic models. By construction, statistical models always include a degree of Model Risk.

53. Tiers: Models are allocated to different groups, or Tiers, depending on their associated Model Risk.

54. Time series analysis:

(written in lower case as “time series analysis”): For a given set of data and variables, this is a process of observing the behaviour of these variables through time. This can be done by considering each variable individually or by considering the joint pattern of the variables together.

55. UAT:

(User Acceptance Testing): Phase of the implementation process during which users rigorously test the functionalities, robustness, accuracy and reliability of a system containing a new model before releasing it into production.

56. Variable Transformation:

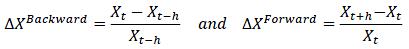

(written in lower case as “variable transformation”): Step of the modelling process involving a transformation of the model inputs before developing a model. Amongst others, common transformations include (i) relative or absolute differencing between variables, (ii) logarithmic scaling, (iii) relative or absolute time change, (iv) ranking, (v) lagging, and (vi) logistic or probit transformation.
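Each of the six transformation families listed above can be sketched in a few lines of Python. These are minimal illustrations assuming plain lists of numbers; the function names are hypothetical, and the tie-breaking rule used for ranking is an arbitrary choice.

```python
import math
from statistics import NormalDist


def variable_difference(x, y, relative=False):
    """(i) Absolute (x - y) or relative (x / y - 1) differencing between two variables."""
    return [a / b - 1 if relative else a - b for a, b in zip(x, y)]


def log_scale(series):
    """(ii) Logarithmic scaling; defined for strictly positive values only."""
    return [math.log(v) for v in series]


def time_change(series, relative=False):
    """(iii) Absolute or relative change of a variable between consecutive periods."""
    return [b / a - 1 if relative else b - a for a, b in zip(series, series[1:])]


def rank(series):
    """(iv) Ranking, 1 = smallest value (ties broken by order of appearance)."""
    order = sorted(range(len(series)), key=series.__getitem__)
    ranks = [0] * len(series)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def lag(series, k=1):
    """(v) Lag by k periods; the first k observations are unavailable (None)."""
    return [None] * k + series[: len(series) - k]


def logit(p):
    """(vi) Logistic (log-odds) transformation of a probability in (0, 1)."""
    return math.log(p / (1 - p))


def probit(p):
    """(vi) Probit transformation: inverse standard normal CDF of a probability."""
    return NormalDist().inv_cdf(p)
```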

57. Wholesale Clients:

(written in lower case as “wholesale clients”): Wholesale clients refer to any client that is not considered as a retail client as per the definition of these Standards.

1 Context and Objective

1.1 Regulatory Context

1.1.1

The Risk Management Regulation (Circular No. 153/2018) issued by the Central Bank of the UAE (“CBUAE”) on 27th May 2018 states that banks must have robust systems and tools to assess and measure risks.

1.1.2

To set out modelling requirements for licensed banks, the CBUAE has issued Model Management Standards (“MMS”) and Model Management Guidance (“MMG”). Both MMS and MMG should be read jointly as they constitute a consistent set of requirements and guidance, as follows:

(i)

The MMS outline general standards applicable to all models and constitute minimum requirements that must be met by UAE banks.

(ii)

The MMG expands on technical aspects that are expected to be implemented by UAE banks for certain types of models. Given the wide range of models and the complexity of some, the CBUAE recognises that alternative approaches can be envisaged on specific technical points. Whilst this MMG neither constitutes additional legislation or regulation nor replaces or supersedes any legal or regulatory requirements or statutory obligations, deviations from the MMG should be clearly justified and will be subject to CBUAE supervisory review.

1.2 Objectives

1.2.1

Both the MMS and MMG share three key objectives. The first objective is to ensure that models employed by UAE banks meet quality standards to adequately support decision-making and reduce Model Risk. The second objective is to improve the homogeneity of model management across UAE banks. The third objective is to mitigate the risk of potential underestimation of provisions and capital across UAE banks.

1.2.2

The MMG outlines techniques based on practices commonly accepted by practitioners and academics internationally. The majority of its content has been subject to consultation with numerous subject matter experts in the UAE and therefore also reflects expected practices amongst UAE institutions.

1.3 Document Structure

1.3.1

Each section of the MMG addresses a different type of model. Articles are numbered sequentially throughout the MMG, so that each article number is a unique reference across the entire document.

1.3.2

Both the MMS and the MMG contain an appendix summarising the main numerical limits included throughout each document. Such a summary is expected to ease the implementation and monitoring of these limits by both institutions and the CBUAE.

1.4 Scope of Application

1.4.1

The MMG applies to all licensed banks in the UAE, which are referred to herein as “institutions”.

1.4.2

The scope of institutions is consistent across the MMS and the MMG. Details about the scope of institutions are available in the MMS.

1.4.3

Branches or subsidiaries of foreign institutions should apply the more conservative of the practices set out in the MMG and the expectations of their parent company’s regulator.

1.4.4

Institutions with a parent company incorporated in the UAE should ensure that all their branches and subsidiaries comply with the MMG.

1.5 Requirements and Timeframe

1.6 Scope of Models

1.6.1

The MMG focuses on the main credit risk models entering the computation of the Expected Credit Loss in the context of the current accounting requirements, due to their materiality and their relevance across the vast majority of institutions. The MMG also provides guidance on other models used for the assessment of interest rate risk in the banking book and net present values.

1.6.2

The MMG does not impose the use of these models. The MMG outlines minimum expected practices if institutions decide to use such models, in order to manage Model Risk appropriately.

1.6.3

As model management matures across UAE institutions, additional model types may be included in the scope of the MMG in subsequent publications.

Table 1: List of model types covered in the MMG

Model type covered in the MMG:

Rating Models
PD Models
LGD Models
Macro Models
Interest Rate Risk in the Banking Book Models
Net Present Value Models

2 Rating Models

2.1 Scope

2.1.1

The vast majority of institutions employ rating models to assess the creditworthiness of their obligors. Rating models provide essential metrics used as foundations for multiple core processes within institutions. Ratings have implications for key decisions, including but not limited to, risk management, provisioning, pricing, capital allocation and Pillar II capital assessment. Institutions should pay particular attention to the quality of their rating models and subsequent PD models, presented in the next section.

2.1.2

Inadequate rating models can result in material financial impacts due to a potentially incorrect estimation of credit risk. The CBUAE will pay particular attention to the suitability of the design and calibration of rating and PD models. Rating models that continuously underperform even after several recalibrations should be replaced and should no longer be used for decision-making and reporting.

2.1.3

For the purpose of the MMG, a rating and a score should be considered identical concepts, that is a numerical quantity without units representing the relative creditworthiness of an obligor or a facility on a predefined scale. The main objective of rating models is to segregate obligors (or facilities) that are likely to perform under their current contractual obligations from the ones that are unlikely to perform, given a set of information available at the rating assessment date.
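How well a rating model segregates future defaulters from performing obligors is commonly measured by the area under the ROC curve (AUC) and the related Gini coefficient (Gini = 2 × AUC − 1). The sketch below is one illustration of such a discrimination metric, not a metric prescribed by this section; it assumes that a higher score means higher risk, and the function names and sample data are hypothetical. The pairwise loop is quadratic and intended for small examples only.

```python
def auc(scores, defaulted):
    """Probability that a randomly chosen defaulter scores as riskier than a randomly
    chosen performer; ties count one half. Assumes higher score = higher risk."""
    bad = [s for s, d in zip(scores, defaulted) if d]
    good = [s for s, d in zip(scores, defaulted) if not d]
    if not bad or not good:
        raise ValueError("need both defaulted and performing obligors")
    wins = 0.0
    for b in bad:
        for g in good:
            if b > g:
                wins += 1.0
            elif b == g:
                wins += 0.5
    return wins / (len(bad) * len(good))


def gini(scores, defaulted):
    """Gini coefficient (accuracy ratio): 0 = no discrimination, 1 = perfect ranking."""
    return 2.0 * auc(scores, defaulted) - 1.0


# Hypothetical scores and default flags (1 = defaulted).
print(gini([1, 4, 2, 3], [0, 1, 1, 0]))  # 0.5
```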

2.1.4

The construction of rating models is well documented by practitioners and in academic literature. Therefore, it is not the objective of this section to elaborate on the details of modelling techniques. Rather, this section focuses on minimum expected practices and the challenging points that should attract institutions’ attention.

2.2 Governance and Strategy

2.2.1

The management of rating models should follow all the steps of the model life-cycle articulated in the MMS. The concept of model ownership and independent validation is particularly relevant to rating models due to their direct business implications.

2.2.2

It is highly recommended that institutions develop rating models internally based on their own data. However, in certain circumstances such as for low default portfolios, institutions may rely on support from third party providers. This support can take several forms, presented below through a simplified categorisation. In all cases, the management and calibration of models should remain the responsibility of institutions. Consequently, institutions should define, articulate and justify their preferred type of modelling strategy for rating models. This strategy will have material implications for the quality, accuracy and reliability of the outputs.

2.2.3

The choice of strategy has a material impact on the methodology employed. Under all circumstances, institutions remain accountable for the modelling choices embedded in their rating models and their respective calibrations.

2.2.4

Various combinations of third party contributions exist. These can be articulated around the supplier’s contribution to the model development, the IT system solution and/or the data for the purpose of calibration. Simplified categories are presented below for the purpose of establishing minimum expected practices:

(i)

Type 1 – Support for modelling: A third party consultant is employed to build a rating model based on the institution’s own data. The IT infrastructure is fully developed internally. In this case, institutions should work in conjunction with consultants to ensure that sufficient modelling knowledge is retained internally. Institutions should ensure that the modelling process and the documentation are compliant with the principles articulated in the MMS.

(ii)

Type 2 – Support for modelling and infrastructure: A third party consultant provides a model embedded in software that is calibrated based on the institution’s data. In this case, the institution has less control over the design of the rating model. The constraints of such an approach are as follows:

a.

Institutions should ensure that they understand the modelling approach being provided to them.

b.

Institutions should fully assess the risks related to using a system solution provided by external parties. At a minimum, this assessment should be made in terms of performance, system security and stability.

c.

Institutions should ensure that a comprehensive set of data is archived in order to perform validations once the model is implemented. This data should cover both the financial and non-financial characteristics of obligors and the performance data generated by the model. The data should be stored at a granular level, i.e. at a factor level, in order to fully assess the performance of the model.

(iii)

Type 3 – Support for modelling, infrastructure and data: In addition to Type 2 support, a third party consultant provides data and/or a ready-made calibration. This is the weakest form of control by institutions. For such models, the institution should demonstrate that additional controls and validation are implemented in order to reduce Model Risk. Immediately after the model implementation, the institution should start collecting internal data (where possible) to support the validation process. Such validation could result in a material shift in obligors’ ratings and lead to financial implications.

(iv)

Type 4 – Various supports: In the case of various supports, the minimum expected practices are as follows:

a.

If a third party provides modelling services, institutions should ensure that sufficient knowledge is retained internally.

b.

If a third party provides software solutions, institutions should ensure that they have sufficient controls over parameters and that they archive data appropriately.

c.

If a third party provides data for calibration, institutions should take the necessary steps to collect internal data in accordance with the data management framework articulated in the MMS.

2.2.5

In conjunction with the choice of modelling strategy, institutions should also articulate their modelling method for rating models. A range of possible approaches can be envisaged between two distinct categories: (i) data-driven statistical models that can rely on both quantitative and qualitative (subjective) factors, and (ii) expert-based models that rely only on views from experienced individuals without the use of statistical data. Institutions should consciously articulate the rationale for their chosen approach within this range.

2.2.6

Institutions should aim to avoid purely expert-based models, i.e. models with no data inputs. Purely expert-based models should be regarded as the weakest form of models and therefore as the least preferable option. If the portfolio rated by such a model represents more than 10% of the institution’s loan book (excluding facilities granted to governments and financial institutions), the institution should demonstrate that additional controls and validation are implemented in order to reduce Model Risk. It should also ensure that Senior Management and the Board are aware of the uncertainty arising from such a model. Immediately after the model implementation, the institution should start collecting internal data to support the validation process.

2.3 Data Collection and Analysis

2.3.1

Institutions should manage and collect data for rating models in compliance with the MMS. The data collection, cleaning and filtering should be fully documented in such a way that they can be traced by any third party.

2.3.2

A rigorous process for data collection is expected. The type of support strategy presented in earlier sections has no implications on the need to collect data for modelling and validation.

2.3.3

For the development of rating models, the data set should include, at a minimum, (i) the characteristics of the obligors and (ii) their performance, i.e. whether they were flagged as default. For each rating model, the number of default events included in the data sample should be sufficiently large to permit the development of a robust model. This minimum number of defaults will depend on business segments and institutions should demonstrate that this minimum number is adequate. If the number of defaults is too small, alternative approaches should be considered.

2.3.4

At a minimum, institutions should ensure that the following components of the data management process are documented. These components should be included in the scope of validation of rating models.

(i) Analysis of data sources, (ii) Time period covered, (iii) Descriptive statistics about the extracted data, (iv) Performing and non-performing exposures, (v) Quality of the financial statements collected, (vi) Lag of financial statements, (vii) Exclusions and filters, and (viii) Final number of performing and defaulted obligors by period.

2.4 Segmentation

2.4.1

Segmentation means splitting a statistical sample into several groups in order to improve the accuracy of modelling. This concept applies to any population of products or customers. The choice of portfolio, customer and/or product segmentation has a material impact on the quality of rating models. Generally, the behavioural characteristics of obligors and associated default rates depend on their industry and size (for wholesale portfolios) and on product types (for retail portfolios). Consequently, institutions should thoroughly justify the segmentation of their rating models as part of the development process.

2.4.2

The characteristics of obligors and/or products should be homogeneous within each segment in order to build appropriate models. First, institutions should analyse the representativeness of the data and pay particular attention to the consistency of obligor characteristics, industry, size and lending standards. The existence of material industry bias in data samples should result in the creation of a rating model specific to that industry. Second, the obligor sample size should be sufficient to meet minimum statistical performance. Third, the definition of default employed to identify default events should also be homogeneous across the data sample.

2.5 Default Definition

2.5.1

Institutions should define and document two definitions of default, employed in two different contexts: (i) for the purpose of rating model development and (ii) for the purpose of estimating and calibrating probabilities of default. These two definitions may be identical or, if necessary, different. The scope of these definitions should cover all credit facilities and all business segments of the institution. In this process, institutions should apply the following principles.

2.5.2

For rating models: The definition of default in the context of a rating model is a choice made to achieve a meaningful discrimination between performing and non-performing obligors (or facilities). The terminology ‘good’ and ‘bad’ obligors is sometimes employed by practitioners in the context of this discrimination. Institutions should define explicitly the definition of default used as the dependent variable when building their rating models.

(i) This choice should be guided by modelling considerations, not by accounting considerations. The level of conservatism embedded in the definition of default used to develop rating models has no direct impact on the institution’s P&L. It simply supports a better identification of customers unlikely to perform.

(ii) Consequently, institutions can choose amongst several criteria to identify default events in order to maximise the discriminatory power of their rating models. This choice should be made within boundaries. At a minimum, they should rely on the concept of days-past-due (“DPD”). An obligor should be considered in default if its DPD since the last payment due is greater than or equal to 90, or if it is identified as defaulted by the risk management function of the institution.

(iii) If deemed necessary, institutions can use more conservative DPD thresholds in order to increase the predictive power of rating models. For low default portfolios, institutions are encouraged to use lower thresholds, such as 60 days, in order to capture more default events. In certain circumstances, restructuring events can also be included to test the power of the model to identify early credit events.

2.5.3

For PD estimation: The definition of default in the context of PD estimation has direct financial implications through provisions, capital assessment and pricing.

(i) This choice should be guided by accounting and regulatory principles. The objective is to define this event in such a way that it reflects the cost borne by institutions upon the default of an obligor.

(ii) For that purpose, institutions should employ the definition of default articulated in the CBUAE Credit Risk Regulation, separately from the MMS and MMG. As the regulation evolves, institutions should update the definition employed for modelling and recalibrate their models.

2.6 Rating Scale

2.6.1

Rating models generally produce an ordinal indicator on a predefined scale representing creditworthiness. The scores produced by each model should be mapped to a fixed internal rating scale employed across all aspects of credit risk management, in particular for portfolio management, provision estimation and capital assessment. The rating scale should be the result of explicit choices made as part of the model governance framework outlined in the MMS. At a minimum, the institution’s master rating scale should comply with the following principles:

(i) The granularity of the scale should be carefully defined in order to support credit risk management appropriately. An appropriate balance should be found regarding the number of grades. Too few buckets will reduce the accuracy of decision making. Too many buckets will provide a false sense of accuracy and could be difficult to use for modelling.

(ii) Institutions should ensure that the distribution of obligors (or exposures) spans most rating buckets. High concentration in specific grades should be avoided, as should the use of too many grades with no obligors. Consequently, institutions may need to define their rating grades differently from rating agencies’ grades, by expanding or grouping certain grades.

(iii) The number of buckets should be chosen in such a way that the obligors’ probability of default in each grade can be robustly estimated (as per the next section on PD models).

(iv) The rating scale from external rating agencies may be used as a benchmark; however, its granularity may not be the most appropriate for a given institution. Institutions with a large proportion of their portfolio in non-investment grade rating buckets should pay particular attention to bucketing choices. They are likely to require more granular buckets in this portion of the scale to assess their risk more precisely than with standard scales from rating agencies.

(v) The choice of an institution’s rating scale should be substantiated and documented. The suitability of the rating scale should be assessed on a regular basis as part of model validation.

2.7 Model Construction

2.7.1

The objective of this section is to draw attention to the key challenges and minimum expected practices to ensure that institutions develop effective rating models. The development of retail scorecards is a standardised process that all institutions are expected to understand and implement appropriately on large amounts of data. Wholesale rating models tend to be more challenging due to smaller population sizes and the complexity of the factors driving defaults. Consequently, this section related to model construction focuses on wholesale rating models.

2.7.2

Wholesale rating models should incorporate, at a minimum, financial information and qualitative inputs. The development process should include a univariate analysis and a multivariate analysis, both fully documented. All models should be constructed based on a development sample and tested on a separate validation sample. If this is not possible in the case of data scarcity, the approach should be justified and approved by the validator.

2.7.3

Quantitative factors: These are characteristics of the obligors that can be assessed quantitatively, most of which are financial variables. For wholesale rating models, institutions should ensure that the creation of financial ratios and subsequent variable transformations are rigorously performed and clearly documented. The financial variables should be designed to capture the risk profile of obligors and their associated financing. For instance, the following categories of financial ratios are commonly used to assess the risk of corporate lending: operating performance, operating efficiency, liquidity, capital structure, and debt service.

2.7.4

Qualitative subjective factors: These are characteristics of the obligor that are not easily assessed quantitatively, for instance the experience of management or the dependency of the obligor on its suppliers. The following categories of subjective factors are commonly used to assess the risk of corporate lending: industry performance, business characteristics and performance, management character and experience, and quality of financial reporting and reliability of auditors. The assessment of these factors is generally achieved via bucketing that relies on experts’ judgement. When using such qualitative factors, the following principles should apply:

(i) Institutions should ensure that this assessment is based upon a rigorous governance process. The collection of opinions and views from experienced credit officers should be treated as a formal data collection process. The data should be subject to quality control, and erroneous data points should be removed.

(ii) If the qualitative subjective factors are employed to adjust the outcome of the quantitative factors, institutions should control and limit this adjustment. Institutions should demonstrate that the weights given to the expert-judgement section of the model are appropriate. Institutions should not perform undue rating overrides with expert judgement.

2.7.5

Univariate analysis: In the context of rating model development, this step involves assessing the discriminatory power of each quantitative factor independently and assessing the degree of correlation between these quantitative factors.

(i) The assessment of the discriminatory power should rely on clearly defined metrics, such as the accuracy ratio (or Gini coefficient). Variables that display no relationship, or a counterintuitive relationship, with default rates should preferably be excluded. They can be included in the model only after a rigorous documentation of the rationale supporting their inclusion.

(ii) Univariate analysis should also involve an estimation of the correlations between the quantitative factors, with the aim of avoiding multicollinearity in the next step of the development.

(iii) The factors should be ranked according to their discriminatory power. The development team should comment on whether the observed relationships meet economic and business expectations.
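The univariate steps above can be illustrated with a short Python sketch. It is a minimal example that assumes a binary default flag per obligor and assumes higher factor values mean higher risk. The function names and sample data are hypothetical. The accuracy ratio is computed as 2 * AUC - 1, and factors are then ranked on that basis.

```python
from typing import Dict, List, Sequence, Tuple


def accuracy_ratio(scores: Sequence[float], defaults: Sequence[int]) -> float:
    """Accuracy ratio (Gini coefficient) of one factor: AR = 2 * AUC - 1.

    Assumes higher values indicate higher risk; factors where higher values
    mean lower risk (e.g. interest cover) should be sign-flipped first.
    """
    bads = [s for s, d in zip(scores, defaults) if d == 1]
    goods = [s for s, d in zip(scores, defaults) if d == 0]
    if not bads or not goods:
        raise ValueError("need at least one defaulted and one performing obligor")
    # AUC = share of (defaulted, performing) pairs ranked correctly; ties count half.
    # This pairwise loop is O(n_bad * n_good): fine for a sketch, slow for large samples.
    wins = 0.0
    for b in bads:
        for g in goods:
            if b > g:
                wins += 1.0
            elif b == g:
                wins += 0.5
    auc = wins / (len(bads) * len(goods))
    return 2.0 * auc - 1.0


def rank_factors(
    factors: Dict[str, Sequence[float]], defaults: Sequence[int]
) -> List[Tuple[str, float]]:
    """Rank candidate factors by standalone accuracy ratio, strongest first."""
    ars = {name: accuracy_ratio(values, defaults) for name, values in factors.items()}
    return sorted(ars.items(), key=lambda item: item[1], reverse=True)


# Illustrative data only: 1 = defaulted during the observation window.
defaults = [0, 0, 0, 1, 1]
factors = {
    "leverage": [0.2, 0.4, 0.5, 0.7, 0.9],  # separates defaulters perfectly
    "noise": [0.5, 0.1, 0.9, 0.3, 0.6],     # no discriminatory power
}
ranking = rank_factors(factors, defaults)
```

An AR close to zero (as for `noise`) signals no relationship with defaults. A negative AR on a correctly oriented factor signals a counterintuitive relationship.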

2.7.6

Multivariate analysis: This step involves establishing a link between observed defaults and the most powerful factors identified during the univariate analysis.

(i) Common modelling techniques include, amongst others, logistic regressions and neural networks. Institutions can choose amongst several methodologies, provided that the approach is fully understood and documented internally. This is particularly relevant if third party consultants are involved.

(ii) Institutions should articulate clearly the modelling technique employed and the process of model selection. When constructing and choosing the most appropriate model, institutions should pay attention to the following:

(a) The number of variables in the model should be chosen to strike the right balance. An insufficient number of variables can lead to a sub-optimal model with weak discriminatory power. An excessive number of variables can lead to overfitting during the development phase, which will result in weak performance once the model is in use.

(b) The variables should not be too correlated. Each financial ratio should preferably be different in substance. If similar ratios are included, a justification should be provided and overfitting should be avoided.

(c) In the case of bucketing of financial ratios, the defined cut-offs should be based on relevant peer comparisons supported by data analysis, not arbitrarily decided.
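One simple way to check that shortlisted variables are not too correlated is to flag pairs whose absolute Pearson correlation exceeds a cut-off. The sketch below is a minimal illustration. The 0.7 threshold, the function names and the ratios are hypothetical. Spearman’s rank correlation could be substituted where non-linear relationships are expected.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson linear correlation coefficient of two equally long series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation undefined for a constant factor")
    return cov / sqrt(var_x * var_y)


def correlated_pairs(
    factors: Dict[str, Sequence[float]], threshold: float = 0.7
) -> List[Tuple[str, str, float]]:
    """Flag factor pairs whose absolute correlation exceeds `threshold`.

    The 0.7 default is a hypothetical cut-off, not a CBUAE value.
    """
    flagged = []
    for a, b in combinations(sorted(factors), 2):
        r = pearson(factors[a], factors[b])
        if abs(r) > threshold:
            flagged.append((a, b, r))
    return flagged


# Illustrative ratios: the two leverage measures carry the same information.
candidates = {
    "debt_to_equity": [1.0, 2.0, 3.0, 4.0, 5.0],
    "debt_to_assets": [0.5, 1.0, 1.5, 2.0, 2.5],
    "current_ratio": [2.0, 1.0, 3.0, 1.0, 2.0],
}
flags = correlated_pairs(candidates)
```

Here only the two leverage ratios are flagged. Under (b), keeping both in the model would then require a justification.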

2.8 Model Documentation

2.8.1

Rigorous documentation should be produced for each rating model as explained in the MMS. The documentation should be sufficiently detailed to ensure that the model can be fully understood and validated by any independent party.

2.8.2

In addition to the elements articulated in the MMS, the following components should be included:

(i) Dates: The model development date and implementation date should be explicitly mentioned in all rating model documentation.

(ii) Materiality: The model materiality should be quantified, for instance as the number of rated customers and their total corresponding gross exposure.

(iii) Overview: An executive summary with the model rating strategy, the expected usage, an overview of the model structure and the data set employed to develop and test the model.

(iv) Key modelling choices: The default definition, the rating scale and a justification of the chosen segmentation as explained in earlier sections.

(v) Data: A description of the data employed for development and testing, covering the data sources and the time span covered. The cleaning process should be explained, including the filter waterfall and/or any other adjustments used.

(vi) Methodology: The development approach, covering the modelling choices, the assumptions, the limitations and the parameter estimation technique. Univariate and multivariate analyses discussing in detail the construction of factors, their transformation and their selection.

(vii) Expert judgement inputs: All choices supporting the qualitative factors. Any adjustments made to the variables or the model based on expert opinions. Any contributions from consulted parties.

(viii) Validation: Details of testing and validation performed during the development phase or immediately after.

2.9 Usage of Rating Models

2.9.1

Upon the roll-out of a new rating model and/or a newly recalibrated (optimised) rating model, institutions should update client ratings as soon as possible. Institutions should assign new ratings with the new model to 70% of the existing obligors (entering the model scope) within six (6) months and to 95% of the existing obligors within nine (9) months. The assignment of new ratings should be based on financials that have been updated since the issuance of the previous rating, if they exist. Otherwise prior financials should be used. This expectation applies to wholesale and retail models.
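The 70% / six-month and 95% / nine-month expectations above reduce to a coverage calculation. The Python sketch below is illustrative only. It assumes a hypothetical mapping from each existing obligor entering the model scope to the date it was first rated with the new model, with None for obligors not yet re-rated. The function names are also hypothetical.

```python
import calendar
from datetime import date
from typing import Dict, Optional


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the month's length."""
    m = d.month - 1 + months
    year, month = d.year + m // 12, m % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def rerating_coverage(
    rollout: date, new_rating_dates: Dict[str, Optional[date]], months: int
) -> float:
    """Share of in-scope existing obligors rated with the new model within `months`."""
    deadline = add_months(rollout, months)
    done = sum(
        1 for d in new_rating_dates.values()
        if d is not None and rollout <= d <= deadline
    )
    return done / len(new_rating_dates)


def check_rerating_targets(
    rollout: date, new_rating_dates: Dict[str, Optional[date]]
) -> Dict[str, bool]:
    """Test the 70% / 6-month and 95% / 9-month re-rating expectations."""
    return {
        "70% within 6 months": rerating_coverage(rollout, new_rating_dates, 6) >= 0.70,
        "95% within 9 months": rerating_coverage(rollout, new_rating_dates, 9) >= 0.95,
    }


# Illustrative portfolio of 20 existing obligors entering the model scope.
rollout = date(2024, 1, 15)
dates: Dict[str, Optional[date]] = {f"obl{i}": date(2024, 6, 1) for i in range(15)}
dates.update({f"obl{i}": date(2024, 9, 1) for i in range(15, 18)})
dates.update({f"obl{i}": None for i in range(18, 20)})  # not yet re-rated
status = check_rerating_targets(rollout, dates)
```

In this example, 75% of obligors are re-rated within six months, so the first target is met. Only 90% are re-rated within nine months, so the second target is missed.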

2.9.2

In order to achieve this client re-rating exercise in a short timeframe, institutions are expected to rate clients in batches, performed by a team of rating experts, independently from actual, potential or perceived business line interests.

2.9.3

Institutions should put in place a process to monitor the usage of rating models. At a minimum, they should demonstrate that the following principles are met:

(i) All ratings should be archived with a date that reflects the last rating update. This data should be stored in a secure database intended to be used on a regular basis to manage the usage of rating models.

(ii) The frequency of rating assignment should be tracked and reported to ensure that all obligors are rated appropriately in a timely fashion.

(iii) Each rating model should be employed on the appropriate type of obligor defined in the model documentation. For instance, a model designed to assess large corporates should not be used to assess small enterprises.

(iv) Institutions should ensure that the individuals assigning and reviewing ratings are suitably trained and can demonstrate a robust understanding of the rating models.

(v) If the ratings are assigned by the business lines, these should be reviewed and independently signed off by the credit department to ensure that ratings are not biased by short-term, potential or perceived business line interests.

2.10 Rating Override

2.10.1

In the context of the MMG, rating override means rating upgrade or rating downgrade. Overrides are permitted; however, they should follow a rigorously documented process. This process should include a clear governance mirroring the credit approval process based on obligor type and exposure materiality. The decision to override should be controlled by limits expressed in terms of number of notches and number of times a rating can be overridden. Eligibility criteria and the causes for override should be clearly documented. Causes may include, amongst others: (i) events specific to an obligor, (ii) systemic events in a given industry or region, and/or (iii) changes of a variable that is not included in the model.

2.10.2

Rating overrides should be monitored and be included in the model validation process. The validator should estimate the frequency of overrides and the number of notches between the modelled rating and the new rating. A convenient approach to monitor overrides is to produce an override matrix.
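A minimal sketch of an override matrix follows, assuming integer grades where 1 is the best grade. Rows hold modelled grades and columns hold final grades after override, so every off-diagonal cell is an override. The summary reports override frequency and the notch distribution. The `max_notches` limit is a hypothetical stand-in for the institution’s own limits, and all names are illustrative.

```python
from collections import Counter
from typing import Dict, List, Sequence


def override_matrix(
    modelled: Sequence[int], final: Sequence[int], n_grades: int
) -> List[List[int]]:
    """Counts of obligors by modelled grade (rows) and final grade (columns).

    Grades are integers 1..n_grades, with 1 the best grade.
    """
    matrix = [[0] * n_grades for _ in range(n_grades)]
    for m, f in zip(modelled, final):
        matrix[m - 1][f - 1] += 1
    return matrix


def override_summary(
    modelled: Sequence[int], final: Sequence[int], max_notches: int = 2
) -> Dict[str, object]:
    """Override frequency, notch distribution and breaches of a notch limit.

    Positive notches are downgrades, negative notches are upgrades.
    `max_notches` is a hypothetical limit for illustration.
    """
    notches = [f - m for m, f in zip(modelled, final)]
    overrides = [n for n in notches if n != 0]
    return {
        "override_rate": len(overrides) / len(notches),
        "notch_distribution": dict(sorted(Counter(overrides).items())),
        "limit_breaches": [i for i, n in enumerate(notches) if abs(n) > max_notches],
    }


modelled = [1, 2, 2, 3, 3, 4, 4, 5]
final = [1, 2, 3, 3, 2, 4, 4, 5]
matrix = override_matrix(modelled, final, n_grades=5)
summary = override_summary(modelled, final)
```

Two of the eight ratings are overridden, one upgrade and one downgrade of a single notch each, so the override rate is 25% with no breach of the illustrative limit.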

2.10.3

In some circumstances, the rating of a foreign obligor should not be better than the rating of its country of incorporation. Such override decision should be justified and documented.

2.10.4

A contractual guarantee of a parent company can potentially result in the rating enhancement of an obligor, but conditions apply:

(i)

The treatment of parental support for a rating enhancement should be recognised only based on the production of an independent legal opinion confirming the enforceability of the guarantee upon default. The rating enhancement should only apply to the specific facility benefiting from the guarantee. The process for rating enhancement should be clearly documented. For the avoidance of doubt, a sole letter of intent from the parent company should not be considered as a guarantee for enforceability purpose. A formal legal guarantee is the only acceptable documentation. (ii)

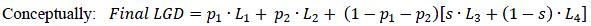

For modelling purpose, an eligible parent guarantee can be reflected in the PD or the LGD of the facility benefiting from this guarantee. If the rating of the facility is enhanced, then the guarantee will logically be reflected in the PD parameter. If the rating of the obligor is not enhanced but the guarantee is deed eligible, then the guarantee can be reflected in the LGD parameter. The rationale behind such choice should be fully documented.

2.11 Monitoring and Validation

2.11.1

Institutions should demonstrate that their rating models are performing over time. All rating models should be monitored on a regular basis and independently validated according to all the principles articulated in the MMS. For that purpose, institutions should establish a list of metrics to estimate the performance and stability of models and compare these metrics against pre-defined limits.

2.11.2

The choice of metrics to validate rating models should be made carefully. These metrics should be sufficiently granular and capture performance through time. It is highly recommended to estimate the change in the model discriminatory power through time, for instance by considering a maximum acceptable drop in accuracy ratio.
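One possible way to operationalise a maximum acceptable drop in accuracy ratio is a traffic-light check of each monitoring period against the AR measured at development. The sketch below measures absolute drops and uses hypothetical amber and red limits of 5 and 10 percentage points. The MMG does not prescribe these values, and the function name and figures are illustrative.

```python
from typing import Dict


def ar_monitoring_status(
    dev_ar: float,
    monitored_ar: Dict[str, float],
    amber_drop: float = 0.05,
    red_drop: float = 0.10,
) -> Dict[str, str]:
    """Traffic-light status per period based on the absolute drop in AR.

    The amber/red drop limits are hypothetical; each institution sets its own.
    """
    status = {}
    for period, ar in monitored_ar.items():
        drop = dev_ar - ar
        if drop > red_drop:
            status[period] = "red"
        elif drop > amber_drop:
            status[period] = "amber"
        else:
            status[period] = "green"
    return status


status = ar_monitoring_status(0.60, {"2023Q1": 0.58, "2023Q2": 0.52, "2023Q3": 0.47})
```

A relative limit (a drop as a share of the development AR) would be an equally valid design choice. It is less strict for models with a high starting AR.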

2.11.3

In addition to the validation requirements articulated in the MMS, institutions should ensure that validation exercises for rating models include the following components:

(i) Development data: A review of the data collection and filtering process performed during the development phase and/or the last recalibration. In particular, this should cover the definition of default and data quality.

(ii) Model usage: A review of the governance surrounding model usage. In particular, the validator should comment on (a) the frequency of rating issuance, (b) the governance of rating production, and (c) the variability of ratings produced by the model. The validator should also liaise with the credit department to form a view on (d) the quality of financial inputs and (e) the consistency of the subjective inputs and the presence of potential bias.

(iii) Rating override: A review of rating overrides. This point does not apply to newly developed models.

(iv) Model design: A description of the model design and its mathematical formulation. A view on the appropriateness of the design, the choice of factors and their transformations.

(v) Key assumptions: A review of the appropriateness of the key assumptions, including the default definition, the segmentation and the rating scale employed when developing the model.

(vi) Validation data: A description of the data set employed for validation.

(vii) Quantitative review: An analysis of the key quantitative indicators covering, at a minimum, the model stability, discriminatory power, sensitivity and calibration. This analysis should cover the predictive power of each quantitative and subjective factor driving the rating.

(viii) Documentation: A review of the quality of the documentation surrounding the development phase and the modelling decisions.

(ix) Suggestions: When deemed appropriate, the validator can make suggestions for defect remediation to be considered by the development team.

3 PD Models

3.1 Scope

3.1.1

The majority of institutions employ models to estimate the probability of default of their obligors (or facilities), for risk management purposes and to comply with accounting and regulatory requirements. These models are generally referred to as ‘PD models’, although this broad definition covers several types of models. For the purpose of the MMG, and to ensure appropriate model management, the following components should be considered as separate models:

(i) Rating-to-PD mapping models, and (ii) Point-in-Time PD Term Structure models.

3.1.2

These models have implications for key decisions including, but not limited to, risk management, provisioning, pricing, capital allocation and Pillar II capital assessment. Institutions should manage these models through a complete life-cycle process in line with the requirements articulated in the MMS. In particular, the development, ownership and validation process should be clearly organised and documented.

3.2 Key Definitions and Interpretations

3.2.1

The following definitions complement the definitions provided at the beginning of the MMG. The probability of default of a borrower or of a facility is denoted “PD”. The proportion of the exposure lost after default, or “loss given default”, is denoted “LGD”.

3.2.2

A point-in-time assessment (“PIT”) refers to the value of a metric (typically PD or LGD) that incorporates the current economic conditions. This contrasts with a through-the-cycle assessment (“TTC”) that refers to the value of the same metric across a period covering one or several economic cycles.

3.2.3

A PD is associated with a specific time horizon, which means that the probability of default is computed over a given period. A 1-year PD refers to the PD over a one-year period, starting today or at any point in the future. A PD Term Structure refers to a cumulative PD over several years (generally starting at the portfolio estimation date). This contrasts with a marginal forward 1-year PD, which refers to a PD starting at some point in the future and covering a one-year period, provided that the obligor has survived until that point.
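The link between a cumulative PD term structure and marginal forward 1-year PDs can be shown with a short sketch. It applies the standard survival identity: the forward PD for year k is (C_k - C_{k-1}) / (1 - C_{k-1}), with C_0 = 0. The function names and term structure are illustrative assumptions.

```python
from typing import List, Sequence


def marginal_forward_pds(cumulative: Sequence[float]) -> List[float]:
    """Conditional 1-year forward PDs from a cumulative PD term structure.

    cumulative holds C_1..C_T; the forward PD for year k is
    (C_k - C_{k-1}) / (1 - C_{k-1}) with C_0 = 0, i.e. conditional on survival.
    """
    forwards, previous = [], 0.0
    for c in cumulative:
        forwards.append((c - previous) / (1.0 - previous))
        previous = c
    return forwards


def cumulative_pds(forwards: Sequence[float]) -> List[float]:
    """Rebuild the cumulative term structure by chaining survival probabilities."""
    survival, out = 1.0, []
    for f in forwards:
        survival *= 1.0 - f
        out.append(1.0 - survival)
    return out


term_structure = [0.02, 0.05, 0.08]  # illustrative cumulative PDs, years 1 to 3
forwards = marginal_forward_pds(term_structure)  # approx. [0.0200, 0.0306, 0.0316]
```

The forward PD for year 2 (about 3.06%) exceeds the unconditional increment of 3%. This is because it is conditional on survival through year 1.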

3.2.4

A rating transition matrix is a square matrix that gives the probabilities of migrating from one rating to another. These probabilities are expressed over a specific time horizon, typically one year, in which case we refer to a ‘one-year transition matrix’. Transitions can also be expressed over several years.
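Multi-year transitions are often derived from a one-year matrix by repeated multiplication. The sketch below makes that modelling assumption explicitly: a time-homogeneous Markov chain with an absorbing default state in the last position. Under these assumptions, the default column of P^h holds the h-year cumulative PDs. Observed multi-year transitions may differ, and the matrix values and function names are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of two square matrices."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def cumulative_pd_term_structure(p: Matrix, years: int) -> List[List[float]]:
    """Cumulative PD by starting grade for horizons 1..years.

    Assumes a time-homogeneous Markov chain whose last state is an absorbing
    default state, so the h-year matrix is P^h and its default column holds
    the h-year cumulative PDs.
    """
    n = len(p)
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # P^0
    structure = []
    for _ in range(years):
        power = matmul(power, p)
        structure.append([power[i][n - 1] for i in range(n - 1)])
    return structure


# Illustrative one-year matrix over grades A, B and the default state D.
one_year = [
    [0.90, 0.08, 0.02],
    [0.10, 0.80, 0.10],
    [0.00, 0.00, 1.00],
]
pds = cumulative_pd_term_structure(one_year, years=2)
# pds[1] is approximately [0.046, 0.182]: the two-year cumulative PDs of A and B
```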

3.3 Default Rate Estimation

3.3.1

Prior to engaging in modelling, institutions should implement a robust process to compute time series of historical default rates, for all portfolios where data is available. The results should be transparent and documented. This process should be governed and approved by the Model Oversight Committee. Once estimated, historical default rates time series should only be subject to minimal changes. Any retroactive updates should be approved by the Model Oversight Committee and by the bank’s risk management committee.

3.3.2

Institutions should estimate default rates at several levels of granularity: (i) for each portfolio, defined by obligor type or product, and (ii) for each rating grade within each portfolio, where possible. In certain circumstances, default rate estimation at rating grade level may not be possible, and institutions may only rely on pool-level estimation. In this case, institutions should justify their approach by demonstrating, with clear evidence based on data, that grade-level estimation would not be sufficiently robust.

3.3.3

Institutions should compute the following default ratio, based on the default definition described in the previous section. This ratio should be computed with an observation window of 12 months to ensure comparability across portfolios and institutions. In addition, institutions are free to estimate this ratio for other windows (e.g. quarterly) for specific modelling purposes.

(i) The denominator is composed of performing obligors with any credit obligation, including off and on balance sheet facilities, at the start of the observation window.

(ii) The numerator is composed of obligors that defaulted at least once during the observation window, on the same scope of facilities.

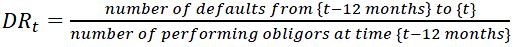

Formally the default rate can be expressed as shown by the formula below, where t represented the date of estimation. Notice that if the ratio is reported at time t, then the ratio is expressed as a backward looking metrics. This concept is particularly relevant for the construction of macro models as presented in subsequent sections. The frequency of computation should be at least quarterly and possibly monthly for some portfolios.
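As an illustration only, the 12-month default rate described above can be sketched in Python. The `Obligor` record, its field layout and the sample portfolio are hypothetical assumptions, not a prescribed data model. An obligor enters the denominator if it had a credit obligation and was not in default at t minus 12 months. It enters the numerator if it then defaulted at least once before or on t.

```python
import calendar
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Sequence, Tuple


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the month's length."""
    m = d.month - 1 + months
    year, month = d.year + m // 12, m % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


@dataclass
class Obligor:  # hypothetical record layout
    obligor_id: str
    onboarded: date  # date of the first credit obligation (on or off balance sheet)
    # (default date, cure date or None if still in default)
    defaults: List[Tuple[date, Optional[date]]] = field(default_factory=list)

    def in_default(self, d: date) -> bool:
        return any(start <= d and (cure is None or d < cure) for start, cure in self.defaults)


def default_rate(obligors: Sequence[Obligor], t: date) -> float:
    """12-month default rate reported at date t (backward looking)."""
    start = add_months(t, -12)
    # Denominator: obligors with a credit obligation and performing at t - 12 months.
    cohort = [o for o in obligors if o.onboarded <= start and not o.in_default(start)]
    if not cohort:
        raise ValueError("no performing obligors at the start of the window")
    # Numerator: cohort members that defaulted at least once in (t - 12 months, t].
    defaulted = [o for o in cohort if any(start < d <= t for d, _ in o.defaults)]
    return len(defaulted) / len(cohort)


portfolio = [
    Obligor("A", date(2020, 1, 1)),
    Obligor("B", date(2021, 5, 1), [(date(2024, 3, 10), None)]),
    Obligor("C", date(2024, 2, 1)),  # onboarded after the window start
    Obligor("D", date(2019, 1, 1), [(date(2023, 6, 1), None)]),  # in default at start
    Obligor("E", date(2018, 1, 1), [(date(2022, 1, 1), date(2022, 9, 1))]),  # cured earlier
]
dr = default_rate(portfolio, date(2024, 12, 31))  # cohort A, B, E; only B defaulted
```

Running the same function at each quarter-end produces the quarterly time series of 12-month default rates referred to in the following paragraphs.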

3.3.4

When the default rate is computed by rating grade, the denominator should refer to all performing obligors assigned to a rating grade at the beginning of the observation window. When the default rate is computed at segment level, the denominator should refer to all performing obligors assigned to that segment at the beginning of the observation window.

3.3.5

For wholesale portfolios, this ratio should be computed in order to obtain quarterly observations over long time periods covering one or several economic cycles. For wholesale portfolios, institutions should aim to gather at least 5 years of data, and preferably longer. For retail portfolios or for portfolios with frequent changes in product offerings, the period covered may be shorter, but justification should be provided.

3.3.6

Institutions should ensure that default time series are homogeneous and consistent through time, i.e. relate to a portfolio with similar characteristics, consistent lending standards and a consistent definition of default. Adjustments may be necessary to build time series representative of the institution’s current portfolios. Particular attention should be given to changes in the institution’s business model through time. This is relevant in the case of rapidly growing portfolios or, conversely, in the case of portfolio run-off strategies. This point is further explained in the MMG section focusing on macro models.

3.3.7

If an obligor migrates between ratings or between segments during the observation period, the obligor should be included in the original rating bucket and/or original segment for the purpose of estimating a default rate. Institutions should document any changes in portfolio segmentation that occurred during the period of observation.
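The grade-level rule above, together with the treatment of guarantees in 3.3.8, can be sketched as follows. Obligors are grouped by their standalone rating at the start of the window, and later migrations are ignored. The input layout (a mapping from obligor identifier to starting grade, plus the set of defaulted identifiers) and the function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Set


def default_rates_by_grade(
    start_ratings: Dict[str, int], defaulted_ids: Set[str]
) -> Dict[int, float]:
    """Default rate per grade, grouping obligors by their rating at window start.

    Migrations during the window are ignored on purpose: an obligor that slides
    from grade 3 to grade 5 before defaulting counts against grade 3.
    """
    counts: Dict[int, int] = defaultdict(int)
    defaults: Dict[int, int] = defaultdict(int)
    for obligor_id, grade in start_ratings.items():
        counts[grade] += 1
        if obligor_id in defaulted_ids:
            defaults[grade] += 1
    return {grade: defaults[grade] / counts[grade] for grade in sorted(counts)}


# Standalone ratings at the start of the window (no guarantee uplift).
start = {"a": 3, "b": 3, "c": 3, "d": 3, "e": 5, "f": 5}
# "a" migrated to grade 5 during the year before defaulting; "e" also defaulted.
rates = default_rates_by_grade(start, {"a", "e"})  # {3: 0.25, 5: 0.5}
```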

3.3.8

When the default rate is computed by rating grade, the ratings at the beginning of the observation window should not reflect risk transfers or any form of parent guarantees, in order to capture the default rates pertaining to the original creditworthiness of the obligors. The ratings at the start of the observation window can reflect rating overrides if these overrides relate to the obligors themselves, independently of guarantees.

3.3.9

When default rate series are computed over long time periods, obligors may come out of their default status after a recovery and a cure period. In subsequent observation windows, such obligors could be performing again and default again, in which case another default event should be recorded. For that purpose, institutions should define minimum cure periods per product and/or portfolio type. If a second default occurs after the end of the cure period, it should be recorded as an additional default event. These cure periods should be based on patterns observed in data sets.
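A possible reading of the cure-period rule is sketched below. Each default episode of an obligor is assumed to be recorded as (date the default trigger started, date it ceased, or None if ongoing). The cure period is assumed to run from the date the trigger ceased. A later episode counts as an additional default event only if it starts after that cure period has ended. Otherwise it is treated as a continuation of the earlier event. The episode layout, the cure lengths and the function names are hypothetical.

```python
import calendar
from datetime import date
from typing import Optional, Sequence, Tuple


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the month's length."""
    m = d.month - 1 + months
    year, month = d.year + m // 12, m % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def count_default_events(
    episodes: Sequence[Tuple[date, Optional[date]]], cure_months: int
) -> int:
    """Count distinct default events for one obligor.

    Each episode is (default trigger started, trigger ceased or None if still
    ongoing). An episode starting within the cure period of the previous one
    is treated as the same default event.
    """
    events = 0
    cure_end: Optional[date] = None
    for started, ended in sorted(episodes, key=lambda e: e[0]):
        if cure_end is None or started > cure_end:
            events += 1
        if ended is None:  # still in default: no later episode can be a new event
            break
        cure_end = add_months(ended, cure_months)
    return events


history = [
    (date(2019, 1, 10), date(2019, 6, 30)),
    (date(2020, 3, 1), date(2020, 8, 31)),
    (date(2022, 2, 1), None),
]
twelve_month_cure = count_default_events(history, cure_months=12)  # 2 events
six_month_cure = count_default_events(history, cure_months=6)      # 3 events
```

The same history yields two or three default events depending on the cure period. This is why the cure period should be grounded in observed patterns.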

3.3.10

Provided that institutions follow the above practices, the following aspects remain subject to the discretion of each institution. First, they may choose to exclude obligors with only undrawn facilities from the numerator and denominator to avoid lowering unduly the default rate of obligors with drawn credit lines. Second, institutions may also choose to estimate default rates based on exposures rather than on counts of obligors; such estimation provides additional statistical information on expected exposures at default.

3.4 Rating-to-PD

3.4.1

For the purpose of risk management, the majority of institutions employ a dedicated methodology to estimate a TTC PD associated with each portfolio and, where possible, associated with each rating grade (or score) generated by their rating models. This estimation is based on the historical default rates previously computed, such that the TTC PD reflects the institution’s experience.

3.4.2

This process results in the construction of a PD scale, whereby the rating grades (or scores) are mapped to a single PD master scale, often common across several portfolios. This mapping exercise is referred to as ‘PD calibration’. It relies on assumptions and methodological choices separate from the rating model; therefore, it is recommended to consider such mapping as a standalone model. This choice is left to each institution and should be justified. The approach should be tested, documented and validated.

3.4.3

Institutions should demonstrate that the granularity of segmentation employed for PD modelling is an appropriate reflection of the risk profile of their current loan book. The segmentation granularity of PD models should be based on the segmentation of rating models. In other words, the segmentation of rating models should be used as a starting point, from which segments can be grouped or split further depending on portfolio features, provided it is fully justified. This modelling choice has material consequences on the quality of PD models; therefore, it should be documented and approved by the Model Oversight Committee. Finally, the choice of PD model granularity should be formally reviewed as part of the validation process.

3.4.4

The rating-to-PD mapping should be understood as a relationship in either direction, since no causal relationship is involved. The volatility of the grade PD through time depends on the sensitivity of the rating model and on the rating methodology employed. Such volatility will arise from a combination of migrations across rating grades and changes in the default rate (“DR”) observed for each grade. Two situations can arise:

(i) If rating models are sensitive to economic conditions, ratings will change and the exposures will migrate across grades, while the DR will remain stable within each grade. In this case, client ratings will change and the TTC PD assigned to each rating bucket will remain stable.

(ii) If rating models are not sensitive to economic conditions, then the exposures will not migrate much through grades but the DR of each grade will change. In this case, client ratings will remain stable but the observed DR will deviate from the TTC PD assigned to each rating bucket.

3.4.5

Institutions should estimate the degree to which they are exposed to each of the situations above. Institutions are encouraged to favour the first situation, i.e. implement rating models that are sensitive to economic conditions, favour rating migrations and keep the 1-year TTC PD assigned to each rating relatively stable. For the purpose of provisioning, economic conditions should be reflected in the PIT PD in subsequent modelling. Deviation from this practice is possible but should be justified and documented.
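One possible way to estimate this exposure, offered here as an assumption rather than a prescribed method, is to split the change in the portfolio DR between two periods into a migration effect (situation (i)) and a within-grade effect (situation (ii)). The sketch below uses obligor shares per grade and grade-level DRs; the function name and all figures are hypothetical.

```python
def decompose_dr_change(w0, dr0, w1, dr1):
    """Split the change in portfolio DR into a migration effect and a within-grade effect.

    w0, w1: share of obligors per grade at the start of periods 0 and 1 (each sums to 1).
    dr0, dr1: observed 1-year default rate per grade in periods 0 and 1.
    The two effects add up exactly to sum(w1 * dr1) - sum(w0 * dr0).
    """
    if not len(w0) == len(dr0) == len(w1) == len(dr1):
        raise ValueError("all inputs must have one entry per grade")
    # Migration: change in grade mix, valued at the period-0 grade DRs.
    migration = sum((b - a) * d for a, b, d in zip(w0, w1, dr0))
    # Within-grade: change in grade DRs, weighted by the period-1 grade mix.
    within_grade = sum(b * (d1 - d0) for b, d0, d1 in zip(w1, dr0, dr1))
    return migration, within_grade


w0, dr0 = [0.5, 0.3, 0.2], [0.01, 0.03, 0.10]
# Situation (i): obligors migrate, grade DRs stay put.
print(decompose_dr_change(w0, dr0, [0.3, 0.4, 0.3], dr0))
# Situation (ii): grade mix stays put, grade DRs move.
print(decompose_dr_change(w0, dr0, w0, [0.02, 0.05, 0.15]))
```

Tracking the share of the total change explained by each effect over several periods gives an indication of which situation dominates for a given rating model.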

3.4.6

The estimation of TTC PD relies on a set of principles that have long been established in the financial industry. At a minimum, institutions should ensure that they cover the following aspects:

(i)

The TTC PD associated with each portfolio or grade should be the long-run average estimate of the 1-year default rates of the corresponding portfolio or grade.

(ii)

The DR time series should be homogeneous and consistent through time, i.e. relate to a portfolio with similar characteristics and grading method.

(iii)

TTC PDs should incorporate an appropriate margin of conservatism depending on the time span covered and the population size.

(iv)

TTC PDs should be estimated over a minimum of five (5) years, and preferably longer for wholesale portfolios. For retail portfolios, changes in product offerings should be taken into account when computing TTC PD.

(v)

The period employed for this estimation should cover at least one of the recent economic cycles in the UAE: (a) the aftermath of the 2008 financial crisis, (b) the 2015-2016 economic slowdown after a sharp drop in oil prices, and/or (c) the Covid-19 crisis.

(vi)

If the estimation period includes too many years of economic expansion or economic downturn, the TTC PD should be adjusted accordingly.
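A minimal sketch of points (i), (iii) and (iv) follows: the TTC PD is taken as the simple average of annual 1-year DRs, a five-year minimum is enforced, and a conservatism margin is added. The margin formula (a one-sided normal add-on on the standard error of the mean across years) is an illustrative assumption; it only captures the time-span dimension, and a population-size component, such as binomial uncertainty on each annual DR, would have to be added separately. The cycle adjustment of point (vi) is not shown.

```python
import math
import statistics
from statistics import NormalDist


def ttc_pd(annual_dr, confidence=0.90, min_years=5):
    """Long-run average of 1-year DRs, plus an illustrative conservatism margin.

    Assumed margin: z(confidence) * stdev(annual DRs) / sqrt(years), so it
    shrinks as more years of history become available.
    """
    years = len(annual_dr)
    if years < min_years:
        raise ValueError(f"at least {min_years} years of DR are required, got {years}")
    average = statistics.fmean(annual_dr)
    std_error = statistics.stdev(annual_dr) / math.sqrt(years)
    margin = NormalDist().inv_cdf(confidence) * std_error
    return average, min(average + margin, 1.0)


# Hypothetical annual default rates of one grade over six years.
best_estimate, conservative = ttc_pd([0.010, 0.012, 0.020, 0.030, 0.015, 0.011])
```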

3.4.7

For low default portfolios, institutions should employ a separate approach to estimate PDs. They should identify an appropriate methodology suitable to the risk profile of their portfolio. It is recommended to refer to common methods proposed by practitioners and academics to address this question. Amongst others, the Pluto & Tasche method or the Benjamin, Cathcart and Ryan method (BCR) are suitable candidates.
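The Pluto & Tasche ‘most prudent estimation’ principle can be sketched in its simplest form, assuming independent defaults observed over a single period (the published method also covers correlated defaults and multi-period data, which this sketch omits). Grades are ordered from best to worst. For each grade, the upper confidence bound at level `gamma` is computed by pooling that grade with all worse grades. The function names and the `gamma = 0.9` default are illustrative choices.

```python
import math


def binomial_cdf(d, n, p):
    """P(X <= d) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(d + 1))


def most_prudent_upper_bound(n, d, gamma, tol=1e-12):
    """Smallest p such that P(Binomial(n, p) <= d) <= 1 - gamma, found by bisection."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        # The binomial CDF decreases in p: if it is still above 1 - gamma, p must be larger.
        if binomial_cdf(d, n, mid) > 1.0 - gamma:
            lo = mid
        else:
            hi = mid
    return hi


def pluto_tasche(obligors, defaults, gamma=0.9):
    """Upper-bound PD per grade, grades ordered from best to worst (independent defaults)."""
    bounds = []
    for k in range(len(obligors)):
        # Most prudent assumption: grade k is as risky as all worse grades combined.
        bounds.append(most_prudent_upper_bound(sum(obligors[k:]), sum(defaults[k:]), gamma))
    return bounds


# Hypothetical low default portfolio: obligors and defaults per grade.
upper_pds = pluto_tasche(obligors=[100, 400, 300], defaults=[0, 2, 1], gamma=0.9)
```

With zero defaults the bound reduces to the closed form 1 - (1 - gamma)^(1/n), which is a convenient sanity check of an implementation.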

3.4.8

For portfolios that are externally rated by rating agencies, institutions can use the associated TTC PDs provided by rating agencies. However, institutions should demonstrate that (i) they do not have sufficient observed DR internally to estimate TTC PDs, (ii) each TTC PD is based on a blended estimation across the data provided by several rating agencies, (iii) the external data is regularly updated to include new publications from rating agencies, and (iv) the decision to use external ratings and PDs is reconsidered by the Model Oversight Committee on a regular basis.

3.5 PIT PD and PD Term Structure

3.5.1

Modelling choices surrounding PIT PD and PD term structure have material consequences on the estimation of provisions and subsequent management decisions. Several methodologies exist with benefits and drawbacks. The choice of methodology is often the result of a compromise between several dimensions, including but not limited to: (i) rating granularity, (ii) time step granularity and (iii) obligor segmentation granularity. It is generally challenging to produce PD term structures with full granularity in all dimensions. Often, one or two dimensions have to be reduced, i.e. simplified.

3.5.2

Institutions should be aware of this trade-off and should choose the most appropriate method according to the size and risk profile of their books. The suitability of a methodology should be reviewed as part of the validation process. The methodology employed can change with evolving portfolios, risk drivers and modelling techniques. This modelling choice should be substantiated, documented and approved by the Model Oversight Committee. Modelling suggestions made by third party consultants should also be reviewed through a robust governance process.

3.5.3

For the purpose of the MMG, minimum expected practices are articulated for the following common methods. Other methodologies exist and are employed by practitioners. Institutions are encouraged to conduct research and consider several approaches.

(i) The transition matrix approach, (ii) the portfolio average approach, and (iii) the Vasicek credit framework.
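For reference, the core relationship of the one-factor Vasicek framework links a TTC PD to a PD conditional on a systemic factor z. The sketch below assumes the convention that z > 0 denotes benign conditions and z < 0 adverse ones; the asset correlation `rho` is an input the institution would need to estimate, and the function name is hypothetical.

```python
from statistics import NormalDist

N = NormalDist()  # standard normal distribution


def vasicek_conditional_pd(ttc_pd, rho, z):
    """1-year PD conditional on the systemic factor z (z > 0: benign, z < 0: adverse).

    PD(z) = Phi((Phi^-1(ttc_pd) - sqrt(rho) * z) / sqrt(1 - rho))
    """
    if not (0.0 < ttc_pd < 1.0 and 0.0 <= rho < 1.0):
        raise ValueError("ttc_pd must be in (0, 1) and rho in [0, 1)")
    return N.cdf((N.inv_cdf(ttc_pd) - rho**0.5 * z) / (1.0 - rho) ** 0.5)


# Hypothetical inputs: 2% TTC PD, 12% correlation, a two-standard-deviation downturn.
stressed_pd = vasicek_conditional_pd(0.02, 0.12, -2.0)
```

In a PIT setting, the forecasted systemic factor for each future period would typically be derived from a macroeconomic model, which is outside the scope of this sketch.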

3.5.4

Irrespective of the modelling approach, institutions should ensure that the results produced by models are consistent with business sense and economic intuition. This is particularly true when using sophisticated modelling techniques. Ultimately, the transformation and the adjustment of data should lead to forecasted PDs that are coherent with the historical default rates experienced by the institution. Deviations should be clearly explained.

3.6 PIT PD with Transition Matrices

3.6.1

This section applies to institutions choosing to use transition matrices as a methodology to model PD term structures.

3.6.2

Transition matrices are convenient tools; however, institutions should be aware of their theoretical limitations and practical challenges. Their design and estimation should follow the decision process outlined in the MMS. Institutions should assess the suitability of this methodology vs. other possible options as part of the model development process. If a third party consultant suggests using transition matrices as a modelling option, institutions should ensure that sufficient analysis is performed, documented and communicated to the Model Oversight Committee prior to choosing such a modelling path.

3.6.3

One of the downsides of using transition matrices is excessive generalisation and the lack of industry granularity. To obtain robust matrices, pools of data are often created with obligors from various backgrounds (industry, geography and size). This reduces the accuracy of the PD prediction across these dimensions. Consequently, institutions should analyse and document the implications of this dimensionality reduction.

3.6.4

The construction of the TTC matrix should meet a set of properties that should be clearly defined in advance by the institution. The matrix should be based on the institution’s internal data, as it is not recommended to use external data for this purpose. If an institution does not have sufficient internal data to construct a transition matrix, or if the matrix does not meet the following properties, then other methodologies should be considered to develop PD term structures.

3.6.5

At a minimum, the following properties should be analysed, understood and documented:

(i)

Matrix robustness: Enough data should be available to ensure a robust estimation of each rating transition point. Large confidence intervals around transition probabilities should be avoided. Consequently, institutions should estimate and document these confidence intervals as part of the model development phase. These should be reviewed as part of the model validation phase.

(ii)

Matrix size: The size of the transition matrix should be chosen as carefully as the size of a rating scale. A number of buckets that is too small will reduce the accuracy of decision making. A number of buckets that is too large will lead to an unstable matrix and provide a false sense of accuracy. Generally, it is recommended to reduce the size of the transition matrix compared to the full rating scale of the institution. In this case, a suitable interpolation method should be created as a bridge from the reduced matrix size back to the full rating scale.

(iii)

Matrix estimation method: Amongst others, two estimation methods are commonly employed: the cohort approach and the generator approach. The method of choice should be tested, documented and reviewed as part of the model validation process.

(iv)

Matrix smoothing: Several inconsistencies often occur in transition matrices, for instance (a) transition probabilities can be zero in some rating buckets, and/or (b) the transition distributions for a given origination rating can be bi-modal. Institutions should ensure that the transition matrix respects Markovian properties.
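The sketch below illustrates points (i) and (iii) together: a 1-year matrix estimated with the cohort approach (transition counts divided by the number of obligors starting in each grade), with a confidence interval for every transition probability. The choice of the Wilson score interval, the input format and the function name are assumptions for illustration; the generator approach, size reduction and smoothing are not shown.

```python
import math
from statistics import NormalDist


def cohort_matrix(transitions, n_grades, confidence=0.95):
    """Cohort-approach 1-year transition matrix with Wilson confidence intervals.

    transitions: iterable of (start_grade, end_state) pairs, grades 0..n_grades-1,
    end_state == n_grades meaning default. The default state is treated as absorbing.
    """
    size = n_grades + 1
    counts = [[0] * size for _ in range(size)]
    for start, end in transitions:
        counts[start][end] += 1
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    matrix, intervals = [], []
    for i in range(n_grades):
        n = sum(counts[i])
        if n == 0:
            raise ValueError(f"no observations starting in grade {i}")
        row, ci_row = [], []
        for j in range(size):
            p = counts[i][j] / n
            # Wilson score interval for a binomial proportion.
            centre = (p + z * z / (2 * n)) / (1 + z * z / n)
            half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / (1 + z * z / n)
            row.append(p)
            ci_row.append((max(0.0, centre - half), min(1.0, centre + half)))
        matrix.append(row)
        intervals.append(ci_row)
    matrix.append([0.0] * n_grades + [1.0])  # absorbing default row
    intervals.append([(0.0, 0.0)] * n_grades + [(1.0, 1.0)])
    return matrix, intervals


# Hypothetical observations: 10 obligors starting in each of two grades.
transitions = [(0, 0)] * 8 + [(0, 1)] * 2 + [(1, 1)] * 6 + [(1, 0)] * 2 + [(1, 2)] * 2
matrix, intervals = cohort_matrix(transitions, n_grades=2)
```

With only ten obligors per grade the intervals come out very wide, which is the kind of robustness issue point (i) asks institutions to document.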

3.6.6

If the institution decides to proceed with the transition matrix approach, the modelling approach should be articulated as a clear sequence of steps to ensure transparency in the decision process. At a minimum, the following sequence should be present in the modelling documentation. The MMG does not intend to elaborate on the exact methodology of each step. Rather, the MMG intends to draw attention to modelling challenges and set minimum expected practices as follows:

(i)

TTC transition matrix: The first step is the estimation of a TTC matrix that meets the properties detailed in the previous article.

(ii)

Credit Index: The second step is the construction of a Credit Index (“CI”) that appropriately reflects the difference between the observed PIT DR and TTC DR (after probit or logit transformation). The CI should be coherent with the TTC transition matrix. This means that the volatility of the CI should reflect the volatility of the transition matrix through time. For that purpose, the CI and the TTC transition matrix should be based on the same data. If not, justification should be provided.

(iii)

Forecasted CI: The third step involves forecasting the CI with a macroeconomic model. However, a segmentation issue often arises. If the matrix was created by pooling obligors from several segments, then only one blended CI will be estimated. This may be insufficient to capture the relationship between macroeconomic variables and the creditworthiness of obligors at segment level for the purpose of PIT modelling. Institutions should be cognisant of this limitation and provide solutions to overcome it. An option is to adjust the blended forecasted CI to create several granular CIs that would reflect the behaviour of each segment.

(iv)

Adjusted transition matrix: The fourth step is the development of a mechanism to adjust the TTC transition matrix with the forecasted CI or the adjusted CIs. Several forward PIT transition matrices should be obtained at several points in the future.

(v)

PD term structure: Finally, a PD term structure should be created based on the forward PIT transition matrices. Methodologies based on matrix multiplication techniques should be robust and consistently applied.
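Steps (ii), (iv) and (v) can be sketched end to end under simplifying assumptions. The CI is defined here as the probit distance between the PIT DR and the TTC DR. Each TTC row is adjusted by shifting its cumulative probabilities (of ending in a given state or worse) by the CI in probit space; this is only one of several possible adjustment mechanisms. The PD term structure is then read from the default column of the product of the forward matrices, which presumes the Markov property. All names and figures are hypothetical, the forecasted CIs stand in for the output of a macroeconomic model (step (iii)), and no segment-level adjustment is shown.

```python
from statistics import NormalDist

N = NormalDist()  # standard normal distribution


def credit_index(pit_dr, ttc_dr):
    """Illustrative CI: probit distance of the PIT DR from the TTC DR (> 0 means worse than average)."""
    return N.inv_cdf(pit_dr) - N.inv_cdf(ttc_dr)


def shift_matrix(ttc, ci):
    """Forward PIT matrix: shift each TTC row in probit space by ci (last state = default)."""
    k = len(ttc) - 1
    pit = []
    for i, row in enumerate(ttc):
        if i == k:
            pit.append(list(row))  # default stays absorbing
            continue
        # cum[j] = probability of ending in state j or any worse state.
        cum = [1.0] + [min(1.0, sum(row[j:])) for j in range(1, len(row))] + [0.0]
        new_cum = [N.cdf(N.inv_cdf(c) + ci) if 0.0 < c < 1.0 else c for c in cum]
        pit.append([new_cum[j] - new_cum[j + 1] for j in range(len(row))])
    return pit


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][m] * b[m][j] for m in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def pd_term_structure(forward_matrices):
    """Cumulative PD per starting grade at the end of each forward 1-year step."""
    k = len(forward_matrices[0]) - 1
    product, curve = None, []
    for m in forward_matrices:
        product = m if product is None else matmul(product, m)
        curve.append([row[k] for row in product[:k]])
    return curve


# Hypothetical 2-grade TTC matrix (states: grade 1, grade 2, default).
ttc = [[0.90, 0.08, 0.02], [0.10, 0.80, 0.10], [0.0, 0.0, 1.0]]
ci_now = credit_index(pit_dr=0.06, ttc_dr=0.04)  # step (ii), from observed DRs
forecast_ci = [0.3, 0.1, 0.0]                     # hypothetical step (iii) output, years 1-3
curve = pd_term_structure([shift_matrix(ttc, ci) for ci in forecast_ci])
```

Because the same CI shifts every row, this sketch inherits the pooling limitation described in step (iii); segment-specific CIs would require one set of forward matrices per segment.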

3.6.7

As part of the development process, several pre-implementation validation checks should be performed on the TTC transition matrix in order to verify that the above properties are met. In addition, for each segment being modelled, the matrix should be constructed such that two logical properties are met by the PD outputs:

(i)

The weighted average TTC PD based on the default column of the TTC transition matrix should be reasonably close to the long-term default rate of the obligors from the same segment(s) employed to build the matrix.

(ii)

The weighted average PIT PD based on the default column of the PIT transition matrix for the next few time steps should be coherent with the current default rate of the obligors from the same segment(s) employed to build the matrix or the segment(s) employed to derive the adjusted CIs.
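Both checks compare a weighted average of the matrix default column with an observed default rate. The sketch below computes that weighted average and applies an acceptance rule. The 20% relative tolerance, the obligor-count weights and the function names are hypothetical; the threshold for ‘reasonably close’ should be defined by the institution. The same function can be applied to a PIT matrix for check (ii).

```python
def weighted_default_column_pd(matrix, weights):
    """Weighted average of the default column (last column) over the non-default grades."""
    k = len(matrix) - 1
    if len(weights) != k or sum(weights) <= 0:
        raise ValueError("need one positive weight per non-default grade")
    return sum(w * row[k] for w, row in zip(weights, matrix[:k])) / sum(weights)


def is_reasonably_close(model_pd, observed_dr, rel_tolerance=0.20):
    """Hypothetical acceptance rule: relative gap within rel_tolerance."""
    return abs(model_pd - observed_dr) <= rel_tolerance * observed_dr


# Hypothetical TTC matrix (states: grade 1, grade 2, default) and obligor counts per grade.
ttc = [[0.90, 0.08, 0.02], [0.10, 0.80, 0.10], [0.0, 0.0, 1.0]]
avg_pd = weighted_default_column_pd(ttc, weights=[600, 400])
print(avg_pd, is_reasonably_close(avg_pd, observed_dr=0.05))
```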

3.7 Portfolio Scaling Approach

3.7.1

This section applies to institutions using a portfolio-level scaling approach to model the term structure of PIT PD. This approach is simpler to implement than transition matrices and is suitable for portfolios with smaller data sets. In this method, the average PD across ratings is modelled, instead of all transition points between ratings. This approach tends to be preferred for smaller segments. The obligor segmentation granularity is preserved at the expense of a simplification of the rating granularity.

3.7.2

In order to ensure transparency in the decision process, the modelling approach should be articulated as a clear sequence of steps. It is not the object of the MMG to elaborate on the exact methodology of each step. Rather, the MMG intends to draw attention to modelling challenges and set minimum expected practices. At a minimum, the following sequence should be present in the modelling documentation:

(i)

Forecast the portfolio average PIT PD per segment based on macro-PD models.

(ii)

Estimate the deviation of the portfolio PIT PD from its long-term average PD.

(iii)

Apply this deviation to PDs at lower granularity levels, for instance pools or rating grades. This can be achieved by scaling in logit or probit space.

(iv)

Construct a PIT PD term structure. It is highly recommended to compare several methods and test their impacts on PD outcomes and risk metrics.
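Steps (ii) to (iv) can be sketched with logit scaling, one of the two options mentioned in step (iii). The sketch assumes the forecasted portfolio PIT PD of step (i) is already available for each future year. It also assumes that the scaled grade PDs are 1-year PDs conditional on survival, chained into a cumulative curve. All names and figures are hypothetical.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def inv_logit(x):
    return 1.0 / (1.0 + math.exp(-x))


def scale_grade_pds(grade_ttc_pds, portfolio_ttc_pd, portfolio_pit_pd):
    """Steps (ii)-(iii): apply the portfolio PIT/TTC deviation to each grade in logit space."""
    shift = logit(portfolio_pit_pd) - logit(portfolio_ttc_pd)
    return [inv_logit(logit(p) + shift) for p in grade_ttc_pds]


def cumulative_pd(annual_conditional_pds):
    """Step (iv): cumulative PD curve from 1-year PDs conditional on prior survival."""
    survival, curve = 1.0, []
    for p in annual_conditional_pds:
        survival *= 1.0 - p
        curve.append(1.0 - survival)
    return curve


grade_ttc = [0.005, 0.02, 0.08]                  # hypothetical grade TTC PDs
portfolio_ttc = 0.025                            # hypothetical portfolio long-term average PD
forecast_portfolio_pit = [0.035, 0.030, 0.025]   # hypothetical step (i) output, years 1-3
yearly = [scale_grade_pds(grade_ttc, portfolio_ttc, pit) for pit in forecast_portfolio_pit]
curves = [cumulative_pd([year[g] for year in yearly]) for g in range(len(grade_ttc))]
```

The same logit shift is applied to every grade, which is precisely the generalisation of PD volatility across grades discussed in 3.7.3.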

3.7.3

The drawback of this method is the generalisation of the PD volatility across grades (or pools) through the use of scalers. Certain rating grades are more prone to volatility than others, which is not reflected in this type of model. Therefore, this method could result in an underestimation of the PIT PD of the lowest rating grades. Consequently, institutions should demonstrate that they assess and understand this effect.

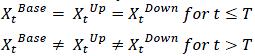

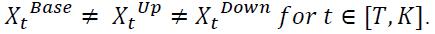

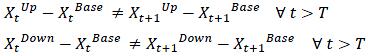

3.7.4